The Future Is a Myriad of Blockchain Clients

I work for Parity Technologies, and we build blockchain clients. Blockchains are fascinating in many ways, not least from a software engineering perspective. Working on a blockchain client means you interact with all levels of the software stack from low to high. On the lowest level you’re doing assembly optimization, writing compilers and VMs. You work your way through networking and databases. Finally at the highest level you deal with things like open-source organization and how to organize code in a massive complex application.

What I want to talk about today is at that higher level: how can we manage the complexity of that application?

I want to add a little primer on blockchain clients and how they interact in the ecosystem over time. A blockchain (usually) starts as a protocol, specified by some spec. Bitcoin started with a paper, followed by the C++ implementation. This C++ implementation has since become the spec of the protocol, and a true multi-client ecosystem was never promoted. In blockchains like Ethereum, there were multiple clients essentially from Day 1. There is some sort of community consensus, built around the Yellow Paper and other specifications, on what the protocol should be; it is not defined by any one implementation. But what this doesn’t take into consideration is what types of clients can or should exist.

I have mainly worked on Ethereum so I will talk mostly from that perspective. Early on in a blockchain’s life, it’s fine that there is one type of client that does everything and that’s what everyone runs. The chain and state are small enough that syncing isn’t a problem and there’s almost no resource usage. Everyone runs a full node so light clients aren’t necessary, and there isn’t a big service industry around the chain that has differing needs from a client. As the chain grows up and that ecosystem does too, it actually doesn’t make sense anymore to have one piece of software that does everything. Something we learnt early on in Ethereum was that the node shouldn’t be responsible for managing private keys. A piece of network-connected software that also manages private key material is just a recipe for disaster (and many of those disasters have happened).

As we grow further I believe we will see a myriad of specialized clients appear. An Ethereum client is, among other things, responsible for:

- allowing users to interact with the network as a light client (not storing the full state)

- storing the full state and all history (a full node)

- creating custom indexes and data-sets to inspect past states (an archive node)

- serving requests for data from the node over JSON-RPC

- serving data to mining software

- propagating blocks and transactions

The problem here is that in a mature ecosystem, there is almost no user that wants to do all these things.

How does this impact software complexity?

Building a blockchain client that does all this is a massive task that takes several years of engineering effort. The Parity Ethereum repository is 153kloc of dense Rust code, but that doesn’t count all the libraries to manage merkle tries and other things we’ve extracted and use as dependencies. In total I would estimate a client to be roughly 500kloc of Rust code. But lines of code are not the best signal of complexity. Being an open source project, we see people try to join the project from both inside and outside the company. In our experience it takes roughly 3 months to become productive on the codebase, and even then you will definitely not have grokked it all. In total I would say there exist at most 10 people in the world who understand every aspect of an Ethereum client. Bitcoin arguably has it worse because there is only one implementation. We built a Bitcoin client, and the number of strange corner cases and bugs that exist in the C++ implementation is surprising. Because there aren’t multiple (active) implementations, the bugs were never caught and so have become part of what Bitcoin is now.

So what can we do about this?

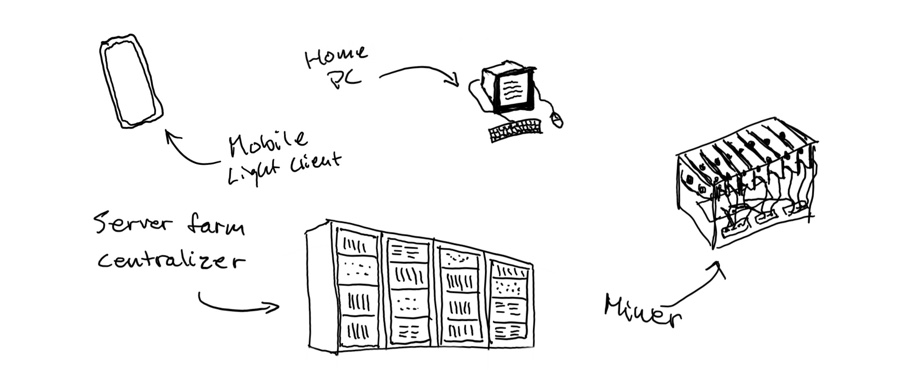

I believe in a future, when blockchains are commonplace and the ecosystems are more mature, where there isn’t a single client that does all these things. It’s quite likely that miners will be running one type of client, archiving services like block explorers will run another, and end-users will run light clients (probably in a mobile app). More roles on the network will be introduced for those who want to make money by serving data to those light clients, or by storing historical block data.

There are two main arguments for why this will happen. The first is that it’s the only way to deal with the complexity and scale core development, which is a necessity if we’re going to have a well-functioning and mature ecosystem. The second is economic. In neither Ethereum nor Bitcoin is it currently possible for a core developer to make money. It’s a typical “Tragedy of the commons” situation where everyone is using this software but no one feels responsible for it, or especially, paying for it. I don’t think we can get away from this commons problem unless we specialize and start introducing economic models in the usage of the software itself. Because everything is open source software you will always be able to remove any economic model that’s hard-coded in, but I don’t see this as a problem.

From what I’ve been able to observe across many ecosystems today, Core Development is funded by consulting work and grants from generous donors. This is not sustainable. For most Core Development teams, going into consulting is distracting and not what they want to work on. This is where specialized blockchain clients could come into play.

Types of blockchain clients and how they could make money

The miner’s client

Miners don’t feel like they should have to pay for a blockchain client because it’s open source code that benefits everyone, but what if there was a blockchain client that specialized in serving miners’ use cases? This client could have a little flag that allowed you to set a contribution rate and have a default of something like $1/block mined. If Parity had received $1 for every block mined with a Parity client on the Ethereum main-net, we would’ve made more money from that than from every commercial project and grant we’ve received combined. Moreover, it would give us a sustainable and predictable source of revenue as long as our client is used and valued. The problem here is that we would likely become so much better for miners that we would out-compete the other clients who are trying to optimize for everyone. The benefits of a multi-client ecosystem, as they pertain to consensus, would be lost.
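To make the contribution flag concrete, here is a minimal sketch of how such a setting could be modelled. Everything in it is an assumption for illustration: the `--contribution-cents` flag name, the `Contribution` type and the choice to store the rate in US cents do not exist in any real client.

```rust
use std::num::ParseIntError;

/// Hypothetical per-block contribution setting, stored in US cents.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Contribution {
    pub cents_per_block: u64,
}

impl Default for Contribution {
    /// The default suggested in the text: $1 per mined block.
    fn default() -> Self {
        Contribution { cents_per_block: 100 }
    }
}

impl Contribution {
    /// Parse the value of a made-up `--contribution-cents` flag.
    /// `None` (flag not given) falls back to the default; `Some("0")` opts out.
    pub fn from_flag(value: Option<&str>) -> Result<Self, ParseIntError> {
        match value {
            None => Ok(Self::default()),
            Some(raw) => Ok(Contribution { cents_per_block: raw.trim().parse()? }),
        }
    }

    /// Total owed, in cents, after mining `blocks` blocks.
    pub fn total_cents(&self, blocks: u64) -> u64 {
        self.cents_per_block.saturating_mul(blocks)
    }
}
```

The point of the sketch is that the rate is always under the miner’s control: leaving the flag out means the default, and setting it to zero opts out entirely.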

If you’re focusing on the mining use-case, you can make fundamental changes to how your node operates. You can manage the merkle-trie completely differently. Instead of needing to access data through the trie for syncing or serving light clients, you just need it to produce a merkle-root. You can make completely different assumptions about resource usage. It’s fine to tell a miner they have to have 32 GB of RAM, where it’s not fine to tell a light-client user that. You wouldn’t have to manage RPCs at all and could remove all of the complexity that comes with them. The miner needs to get work and report mined blocks back; that’s 2 endpoints. You could increase your competitiveness by including mining pool software, tools to deploy stratum servers and much more. None of this is relevant to the average user and doesn’t belong in a “for-all” blockchain client.
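As a rough illustration of how small that surface is, the two endpoints could be sketched as a trait. The names `MinerApi`, `Work` and `ToyMinerNode` are hypothetical, and `toy_pow` uses the standard library’s `DefaultHasher` purely as a stand-in; it is not a real proof-of-work function and this is not how any existing client is structured.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Hypothetical work package handed to mining software.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct Work {
    pub header_hash: u64,
    pub target: u64,
}

/// The whole miner-facing interface: fetch work, report a solution.
pub trait MinerApi {
    fn get_work(&self) -> Work;
    fn submit_work(&mut self, header_hash: u64, nonce: u64) -> bool;
}

/// Stand-in for a proof-of-work function (NOT cryptographically secure).
fn toy_pow(header_hash: u64, nonce: u64) -> u64 {
    let mut hasher = DefaultHasher::new();
    (header_hash, nonce).hash(&mut hasher);
    hasher.finish()
}

/// Toy node that only tracks the current work package.
pub struct ToyMinerNode {
    current: Work,
    pub accepted: u64,
}

impl ToyMinerNode {
    pub fn new(header_hash: u64, target: u64) -> Self {
        ToyMinerNode { current: Work { header_hash, target }, accepted: 0 }
    }
}

impl MinerApi for ToyMinerNode {
    fn get_work(&self) -> Work {
        self.current.clone()
    }

    fn submit_work(&mut self, header_hash: u64, nonce: u64) -> bool {
        // Reject solutions for work we are no longer handing out.
        if header_hash != self.current.header_hash {
            return false;
        }
        // Reject solutions that do not meet the target.
        if toy_pow(header_hash, nonce) > self.current.target {
            return false;
        }
        self.accepted += 1;
        true
    }
}
```

Mining software would loop over nonces and call `submit_work` until it returns `true`; nothing else in the node needs to be reachable from the outside.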

To work around the problem of miners all using the same software, you could scale the rewards based on the distribution of mining software, encouraging more participants; without enough diversity, there would be no rewards for anyone. There are other clever economic games you could play here, but they would all require very complicated protocol-level changes that may have other side-effects. It is not clear to me yet how to combat this issue other than by hoping that economic competition on the market works against it.

The light client

A light client needs a way to interact with the network to request data, and it needs to verify merkle proofs and PoW hashes. It then needs a set of RPC methods to communicate that to the application using the client, be it a DApp or a wallet. Conceptually this is a lot simpler than anything else. In practice there is a lot of overlap with a full node on RPC, but we will get to this in a moment. The problem with light clients is that they currently depend on altruistic behavior. No one has any reason to serve a light client. It doesn’t strengthen the network (which is why you want to propagate blocks and transactions), so you have no real reason to do it. In an ideal world, a light-client user would open a payment channel with a light-client server and send a micropayment to them for each request they make. With this you could also make the light client open a channel to the software maker and send 1/1000th of that micropayment (or whatever an appropriate rate is, possibly also chosen by the user) to the software maker. Assuming a large and healthy ecosystem of millions of mobile light-client users, this could amount to quite significant revenue.

The full node

The full node would still be the most complex software to write and maintain, as it needs to do the most. In a completely split out environment, there are still things they don’t have to do though. They don’t have to be able to be a light client. They don’t have to deal with mining and block production.

They need to be able to send, receive, and validate data on the network. They also need to be able to serve light clients, and they could get paid by light clients for serving them data. As with the light client, the software maker could take an (always optional) cut of the profits received. One could imagine removing a large chunk of complexity by taking away the RPC interface. There would be essentially no way to interact with the node other than through a light client. This may have adverse side-effects on performance though (especially for DApps not optimized for light clients). Power-users may want to be able to interact directly with a full node for the best performance. However, it would be simpler to add a “local” (e.g. IPC or similar) interface between the light client and the full node, so if you’re a power-user you would run both, and the light client is still wholly responsible for the complexity of the RPC interfaces.

There is also something to be said for simplifying the interfaces and coming up with a better structure around something like GraphQL generation that could work both “client-side” (between user and node) and “server-side” (between nodes).

The archive node

The archive node is an interesting case because it serves no purpose on the network and is very often conflated with a full node. It deals with a lot of extra complexity because it comes with so much higher resource usage. Bitcoin doesn’t have an equivalent of an archive node, but block explorers still need to build their own. I have proposed in the past to remove archive nodes from the main client and let whoever uses it build their own versions. This is obviously not popular with those who depend on the archive node software today.

An archive node is a full node that also has some extra hooks for getting historic data quickly, building additional indexes and storing intermediate states. There is an opportunity here for the person who is building the full node described above to add “hooks” to be able to do things like “send all processed transactions to an Elasticsearch backend”. Archival services are getting more and more sophisticated and I’m now seeing services pop up that don’t use the built-in archive mode at all. They use the RPCs of a full-node to pull out data and shove it into Kafka or something similar for processing.
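One way to picture such hooks is as a small trait that the full node calls for every processed transaction. This is only a sketch under assumptions: `TransactionHook`, `ChannelHook`, `FullNode` and the simplified `Transaction` type are made up for illustration, and a standard-library channel stands in for an external system such as Elasticsearch or Kafka.

```rust
use std::sync::mpsc::Sender;

/// Heavily simplified transaction, for illustration only.
#[derive(Debug, Clone, PartialEq)]
pub struct Transaction {
    pub hash: u64,
    pub value: u64,
}

/// Hypothetical extension point called once per processed transaction.
pub trait TransactionHook {
    fn on_transaction(&mut self, block_number: u64, tx: &Transaction);
}

/// Forwards every transaction to a channel; an external indexer would
/// sit on the receiving end.
pub struct ChannelHook {
    pub sender: Sender<(u64, Transaction)>,
}

impl TransactionHook for ChannelHook {
    fn on_transaction(&mut self, block_number: u64, tx: &Transaction) {
        // Ignore the error if the consumer has gone away; the node keeps running.
        let _ = self.sender.send((block_number, tx.clone()));
    }
}

/// Toy full node that only knows how to notify its hooks.
#[derive(Default)]
pub struct FullNode {
    hooks: Vec<Box<dyn TransactionHook>>,
}

impl FullNode {
    pub fn register(&mut self, hook: Box<dyn TransactionHook>) {
        self.hooks.push(hook);
    }

    pub fn process_block(&mut self, block_number: u64, txs: &[Transaction]) {
        for tx in txs {
            // Real validation and execution would happen here.
            for hook in self.hooks.iter_mut() {
                hook.on_transaction(block_number, tx);
            }
        }
    }
}
```

With this shape the node itself stays free of indexing logic: anyone who needs archive-style data registers a hook and does their own processing downstream.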

One can imagine a separate piece of software that interacts with the network to get the data. It would then add the flexibility for external indexers and data-sources to pick up that data and do their own processing. It could also be implemented as the described hooks on the full-node.

In either case it’s something that’s very hard to charge for since there are no on-chain transactions or off-chain payments taking place. I believe a good piece of archiving software would make a great case for closed source proprietary software, since it is essentially just an analytics tool.

There are many consumers of this type of software: block explorers, the Infuras of the blockchain world who serve data to end-users without going through the network, and the analysts and researchers who study behavior or transaction patterns of a chain. They all have different needs, but they are best catered to by building software that hooks into existing databases and tools, doing things that again aren’t needed by anyone but them.

The historical archivist

This is a role that doesn’t exist yet, but I think it is why a lot of people confuse an archive node with something else. In Ethereum, a full node stores all historic data, but it doesn’t need to. It actually doesn’t need to store more than the last ~100 blocks for finality purposes. Removing the rest could save something like 100 GB from the storage requirements of a full node. Currently there is no way to remove it and still ensure the availability of that historical data. There has been some discussion around putting it on IPFS and having it pinned by several people who have an interest in doing so. Then of course we’re in the same boat as the light client, needing altruistic actors. The ideal would be if we could come up with an incentive structure to store this historical data such that there are at least some economic guarantees that it will be available. One could imagine using some interoperability network to store it on one of the many blockchains that cater to storing large amounts of data for a long time. We could then solve this problem and remove the necessity for all full nodes to store all history. The problem is working out who would pay for it, since again it is no single entity’s responsibility to make sure the data is available. It would have to be paid for through inflation, requiring a difficult and possibly contentious consensus change.
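The pruning side of this could be sketched as a bounded window over recent blocks. This is a toy under assumptions: `RecentBlocks` is a hypothetical type, blocks are reduced to their numbers, and the window of 100 is only the rough figure mentioned above. Each block that falls out of the window is returned to the caller, which is where a handoff to a historical archivist would happen.

```rust
use std::collections::VecDeque;

/// Keeps only the most recent `keep` blocks (represented by their numbers).
pub struct RecentBlocks {
    keep: usize,
    numbers: VecDeque<u64>,
}

impl RecentBlocks {
    pub fn new(keep: usize) -> Self {
        RecentBlocks { keep, numbers: VecDeque::with_capacity(keep + 1) }
    }

    /// Import a new block. Returns the block number that was pruned, if any,
    /// so the caller can hand it to whoever archives history.
    pub fn import(&mut self, number: u64) -> Option<u64> {
        self.numbers.push_back(number);
        if self.numbers.len() > self.keep {
            self.numbers.pop_front()
        } else {
            None
        }
    }

    /// Oldest block still held locally.
    pub fn oldest(&self) -> Option<u64> {
        self.numbers.front().copied()
    }

    /// Number of blocks currently held.
    pub fn len(&self) -> usize {
        self.numbers.len()
    }
}
```

The hard part described in the text is not this bookkeeping but making sure someone is economically motivated to keep whatever `import` hands back.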

The future

I personally believe the future of blockchain is Proof of Stake (PoS). That isn’t to say Proof of Work (PoW) will go away; PoW is a marvelous invention and Bitcoin will use it forever. New blockchains will be PoS and possibly use PoW here and there for specialized sybil-resistance, or in things like VDFs or VRFs (which I classify as a subset of PoW).

We have seen some attempts at PoS in the blockchain world already, but they are usually rather simplistic. In more complex PoS designs we already see the tendency to break the protocol into many new roles. There are validators instead of miners, but sometimes you also have nominators or delegators. In things like Bitcoin we’re seeing a whole host of new roles crop up on layer 2 with Lightning Network watchtowers and whatnot.

I believe the above layout works equally well for a PoS world. The validator has completely different interests and requirements than the full node. The full node again does something very different than a light client.

When first building out the software, it is much easier to build everything as a monolith, and the few people that understand the whole design can do everything in one place and make sure everything is coherent and works from day 1. If you were to try to build this split-up architecture from day 1, I’m almost entirely sure you would be shooting yourself in the foot. We’re about 5 years into Ethereum and we’re just now starting to clearly see these users and their needs. No one could have predicted on day 1 that almost the entire Ethereum user base would interact with the network through a centralized service. In another 5 or 10 years’ time, I think we’ll be seeing the rise of these specialized clients for all the next-generation blockchains.

The question is if we’ll start seeing it for Bitcoin and Ethereum as they are today. I’m skeptical because of the amount of work involved, but the promise of being able to make money might encourage more people to participate.